Kubernetes is a tool or a system for automating the deployment of containerized applications. In a loose analogy, one can say what Chef is for infrastructure deployments, Kubernetes is for container deployments.

History of Kubernetes

It was part of Google’s internal tool system called “Borg” which was later made as Open-source in 2015. Internal codename of this project was “Seven” and is reflected in the logo of this system.

Kubernetes has been maintained by Cloud Native Computing Foundation which is a collaboration between Google and Linux Foundation.

What does Kubernete offer?

It offers to manage the “immutable infrastructure” in an automated fashion. Typically, containers are launched using images which do not change. If you want to make changes to the software piece which is part of the container, you simply update the image, launch new containers and throw away the older containers. Kubernetes allows you to do this efficiently.

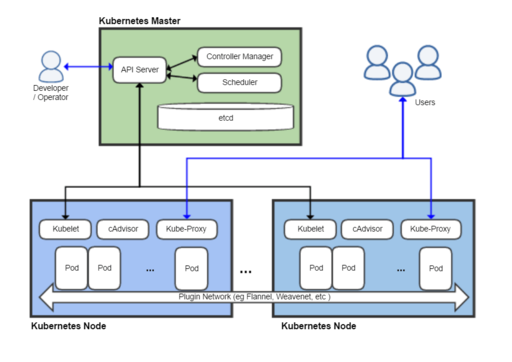

The lowest level abstraction that Kubernete provides is called a “Pod”. It consists of one or more containers which can run on a same “node”. A Node is a server or VM on which Kubernetes cluster is running. The containers in a Pod could be sharing resources and can communicate with each other. This allows running tightly knit services to be run from the same Pod.

In Kubernetes configuration, Pods are not updated but are simply destroyed. New Pods are created when any of the containers are required to be updated.

“Deployments” are something that runs the Kubernetes cluster. They store the configuration, dependencies, resource requirements and access etc. Kubernete also has a “ReplicationController” which allows you to replicate the Pods as per the need.

Leading cloud providers like AWS, Microsoft and Google provide the managed Kuberenets services viz Amazon Elastic Container Service (Amazon EKS), Azure Container Service (AKS), Google Kubernetes Engine(GKE).

Related Links

Related Keywords

Docker, Container, DevOps, Chef, Puppet