Serverless Computing is a computing model where servers are not used. Are you kidding me? You are right to doubt it. That is not the case. Serverless Computing is actually a misnomer. What it really means is that developers are freed from managing servers and can focus on the code/application they want to run in the cloud.

So, how does Serverless Computing really work?

In this computational model, you only need to specify minimum system requirements such as RAM. Based on that, the cloud provider provisions the other required resources, such as CPU and network bandwidth. Whenever you need to execute your code, you hit the endpoint and your code is executed. In the background, the cloud provider provisions the required resources, executes the code, and releases the allocated resources. This allows the cloud provider to use the infrastructure for other customers while your code is idle. It also benefits customers, as they are not charged for idle time. A win-win situation for both, isn’t it?
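To make the flow above concrete, here is a minimal sketch of what such a piece of code might look like. It assumes the `handler(event, context)` entry-point convention used by AWS Lambda's Python runtime; the `name` field in the event and the shape of the response are assumptions made for illustration, not something any provider requires.

```python
import json


def handler(event, context):
    """Entry point the platform would call once per request.

    Assumption: the request payload arrives as a dict in `event`.
    The "name" key is a hypothetical field used only for this example.
    """
    name = (event or {}).get("name", "world")
    # Return an HTTP-style response; the exact shape depends on the provider.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local simulation of a single invocation; in the cloud, the provider
    # calls handler() for you whenever the endpoint is hit.
    print(handler({"name": "serverless"}, None))
```

Notice that nothing in the code mentions servers, ports, or processes. Provisioning and releasing the resources around each call is left entirely to the provider.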

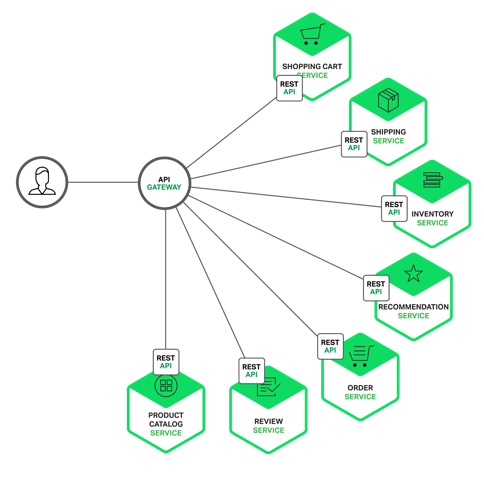

Serverless Computing is popular in microservices architectures. The small pieces of code that form the microservices can be executed very easily using serverless components. On top of that, the cloud provider also handles scaling. So if more customers hit your serverless endpoint, the cloud provider automatically allocates more resources to execute the code.

Any precautions to be taken?

Absolutely. Every developer needs to keep a few things in mind while developing code for Serverless Computing:

- The code can’t have too many initialization steps, or they will add to the latency.

- The code may be executed in parallel, so there shouldn’t be any interdependency between invocations that could leave the application or data in an inconsistent state.

- You don’t have access to the instance. So you can’t really assume anything about the hardware.

- You can’t rely on local storage, since anything written locally may be gone by the next invocation. You need to store all your data in some shared location or central cache.
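The precautions above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `SharedStore` is a hypothetical in-memory stand-in for an external database or cache, `get_config` stands in for whatever expensive setup your code needs, and the `order_id` and `payload` fields are made up for the example. The store is passed to the handler as a third argument purely so the sketch can run locally.

```python
import time

# Expensive setup is cached at module level so it runs once per
# instance rather than on every request, keeping latency down.
_CONFIG = None


def get_config():
    """Load configuration lazily and reuse it on later invocations."""
    global _CONFIG
    if _CONFIG is None:
        # Stand-in for slow work such as reading config or opening connections.
        _CONFIG = {"loaded_at": time.time()}
    return _CONFIG


class SharedStore:
    """Hypothetical stand-in for a shared location or central cache.

    A real deployment would use an external service with an atomic
    conditional write; this in-memory dict only imitates the interface.
    """

    def __init__(self):
        self._data = {}

    def put_if_absent(self, key, value):
        """Store value under key; return False if the key already exists."""
        if key in self._data:
            return False
        self._data[key] = value
        return True


def handler(event, context, store):
    """Process one request without relying on local state.

    Assumption: `store` is injected for local testing; in a real
    function it would be a client created during initialization.
    """
    get_config()
    order_id = event["order_id"]
    # Writing by a unique key makes a repeated or parallel invocation
    # for the same order harmless instead of creating duplicates.
    created = store.put_if_absent(order_id, event["payload"])
    return {"order_id": order_id, "created": created}
```

Calling `handler` twice with the same `order_id` against the same store returns `created: True` the first time and `created: False` the second, which is the behavior you want when the platform runs copies of your code in parallel.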

So, who provides this option?

- AWS (obviously!!) was the first of these to provide it (2014), with AWS Lambda.

- Microsoft Azure provides Azure Functions.

- Google Cloud provides Google Cloud Functions.

- IBM has OpenWhisk, which is an open source serverless platform.

Language support varies from provider to provider. However, all of these offerings support Node.js. Among the other languages, Python and Java are the more popular ones.

Related Keywords

Serverless Architecture, API Gateway, AWS S3, Microservices Architecture
